LightOn || Google Scholar || LinkedIn ||@IgorCarron ||Homepage||ArXiv

Page Views on Nuit Blanche since July 2010

LightOn's Newsletter ||@LightOnIO (1162)||

LinkedIn (727)|| on CrunchBase || our Blog

Nuit Blanche community

@NuitBlog || Facebook (462) || Reddit (2452)

Compressive Sensing on LinkedIn (3967)

Advanced Matrix Factorization on Linkedin (1333)||

Nuit Blanche community

@NuitBlog || Facebook (462) || Reddit (2452)

Compressive Sensing on LinkedIn (3967)

Advanced Matrix Factorization on Linkedin (1333)||

Saturday, July 31, 2010

CS: Test videos of compressed sensing

I don't know the specifics of these tests but they seem to be using compressed sensing: If somebody know more about it, I look forward to hearing about them.

Friday, July 30, 2010

It depends on what the meaning of the word "is" is

as in "is an expert". I am sure Topsy's algorithm can be improved as I dump my rss feed directly onto my twitter feed. In the meantime, check:

CS: Compressive Sensing on Cleve's corner

The Fall 2010 issue of MathWorks News and Notes will include a Cleve's Corner colum entitled "Magic Reconstruction: Compressed Sensing". This program generates the figures for that article.

Thursday, July 29, 2010

Wednesday, July 28, 2010

CS: Miki Elad's Course on Sparse and Redundant Representation Modeling of Images

Miki Elad gave a course as part of the Park City (Utah) Graduate Summer School, organized by the Institute of Advanced Studies (IAS), Princeton on July 12-16 2010. This course (5 lectures) brings the core ideas and achievements made in the field of sparse and redundant representation modeling, with emphasis on the impact of this field to image processing applications. The five lectures (given as PPTX and PDF) are organized as follows:

The five lectures can be downloaded here (51 MB large).

- Lecture 1: The core sparse approximation problem and pursuit algorithms that aim to approximate its solution.

- Lecture 2: The theory on the uniqueness of the sparsest solution of a linear system, the notion of stability for the noisy case, guarantees for the performance of pursuit algorithms using the mutual coherence and the RIP.

- Lecture 3: Signal (and image) models and their importance, the Sparseland model and its use, analysis versus synthesis modeling, a Bayesian estimation point of view.

- Lecture 4: First steps in image processing with the Sparseland model - image deblurring, image denoising, image separation, and image inpainting. Global versus local processing of images. Dictionary learnong with the MOD and the K-SVD.

- Lecture 5: Advanced image processing: Using dicitonary learning for image and video denoising and inpainting, image scale-up using a pair of learned dictionaries, Facial image compression with the K-SVD.

The five lectures can be downloaded here (51 MB large).

Tuesday, July 27, 2010

A Unified Algorithmic Framework for Multi-Dimensional Scaling, Philosophy and the practice of Bayesian statistics

Here are some of the reading I took with me in a place away from the interwebs.

As we all know, many issues in machine learning depends crucially on an initial good distance measure, From Suresh's tweet, here is: A Unified Algorithmic Framework for Multi-Dimensional Scaling by Arvind Agarwal, Jeff M. Phillips, Suresh Venkatasubramanian. The abstract reads:

In this paper, we propose a unified algorithmic framework for solving many known variants of \mds. Our algorithm is a simple iterative scheme with guaranteed convergence, and is \emph{modular}; by changing the internals of a single subroutine in the algorithm, we can switch cost functions and target spaces easily. In addition to the formal guarantees of convergence, our algorithms are accurate; in most cases, they converge to better quality solutions than existing methods, in comparable time. We expect that this framework will be useful for a number of \mds variants that have not yet been studied.

Our framework extends to embedding high-dimensional points lying on a sphere to points on a lower dimensional sphere, preserving geodesic distances. As a compliment to this result, we also extend the Johnson-Lindenstrauss Lemma to this spherical setting, where projecting to a random $O((1/\eps^2) \log n)$-dimensional sphere causes $\eps$-distortion.

Philosophy and the practice of Bayesian statistics by Andrew Gelman, Cosma Rohilla Shalizi. The abstract reads:

A substantial school in the philosophy of science identifies Bayesian inference with inductive inference and even rationality as such, and seems to be strengthened by the rise and practical success of Bayesian statistics. We argue that the most successful forms of Bayesian statistics do not actually support that particular philosophy but rather accord much better with sophisticated forms of hypothetico-deductivism. We examine the actual role played by prior distributions in Bayesian models, and the crucial aspects of model checking and model revision, which fall outside the scope of Bayesian confirmation theory. We draw on the literature on the consistency of Bayesian updating and also on our experience of applied work in social science.Clarity about these matters should benefit not just philosophy of science, but also statistical practice. At best, the inductivist view has encouraged researchers to fit and compare models without checking them; at worst, theorists have actively discouraged practitioners from performing model checking because it does not fit into their framework.

Cosma wrote a small blog entry on his paper here.

Other items to read include:

* Paul Graham's The top idea in your mind

* From Suresh 's blog here are some interesting links: to read and ponder:

- Measure Concentration by Alexander Barvinok

- The Homepage of Nearest Neighbors and Similarity Search by Yury Lifshits and its successor page The Similarity Search Webpage maintained by Arnoldo Muller

Monday, July 26, 2010

It's not a light modulating device...

You know when you say that compressive measurements are universal because 10 years later they could be reconstructed in a different way, something deeper is at stake. See, the device that allows us to get these compressive measurements is not called a light modulating device or a rotating collection of masks, it's called a Randomized Carousel. ...Good luck at your next meeting.....

Mad Men ´The Carousel´ from Emilio on Vimeo.

Credit: AMC, Mad Men.

Mad Men ´The Carousel´ from Emilio on Vimeo.

Credit: AMC, Mad Men.

A Magic Trick Uncovered.

The trick ?

Add the top left number of each of the card chosen by the audience member who identified them as containing the number she had in mind.

Kudos to Laurent Jacques and an anonymous commenter on finding the solution.

It looks like this is a compressive sensing problem in the same way the 12-balls problem is. In effect, the results of the measurements are 0 (not there) or 1 (there) for each card (measurement). The solution is a whole lot simpler than most linear programming techniques for sure.

My take is the trick looks like magic because the measurement process is non-adaptive. The issue of the number of measurements is not even important. You could easily find that number through a bisection process i.e. by repeatedly halving a guess and it would also take five measurements (one less than the proposed solution) to find the culprit. Since these examples are probably a good way of introducing the subject of compressive sensing to a wider audience, I have made a list of these kinds of problems and their resolution in the CS Games page.

Sunday, July 25, 2010

These Technologies Do Not Exist: A Cloud Based Sun Imager (Imaging with Nature)

The idea is to use the ability to describe single clouds through different means (ground laser, balloons...) so that one can infer the way light from the sun is being scattered inside the cloud. This information pretty much defines a multiplexing operation of the light coming from different parts of the Sun. This random multiplexing can then be deconvoluted using techniques used in Compressive Sensing to get an image of the Sun. The technical difficulty is finding the connection between the knowledge of the cloud obtained from other means and the resolution of the reconstructed image of the Sun. A good mitigating factor is the slow speed of clouds and their relative frozen state.

Saturday, July 24, 2010

These Technologies Do Not Exist: Planet Sized Sensors

About a year ago, I mentioned the possible utilization of the Moon as a way of imaging the Earth. Let me be more precise: Five hundred years ago, Leonardo explained what the Earthshine was in the Codex Leicester (Sheet 2A, folio 2r), i.e. it is a reflection of the Earth on the Moon. While this is great news for astrophysicist that are testing their theories on how they can detect exo-planets [2], this Earth reflection should also be seen as a great opportunity for imaging Earth. The test done in [1] showed that you could indeed detect several chemical elements of the earth surface but that these contributions come in a very multiplexed manner because the Moon has a lot of mountains/craters.From [1]:

An alternative method to obtain the Earth’s disk-averaged spectrum consists of taking a spectrum of the Moon Earthshine, i.e. Earth light backscattered by the non-sunlit Moon. A spectrum of the Moon Earthshine directly gives a disk-averaged spectrum of the Earth at a given phase as seen from the Moon (since the Moon surface roughness “washes out” any spatial information on the Earth’s colour).

The point I am making is that the need to quantify this "washing out of spatial information" comes primarily from people whose goal is to detect chemical elements on exo-planets first. To them, spatial information , while interesting, is not likely to be of concern for a little while (detection limit of sensors) since in Exo-planet detection, the topography of the planet is unknown.

In our case, however, because of satellites orbiting that Moon for the past 40 years, we have a pretty good idea of the topography of that celestial body (1 meter resolution) and have a reasonable understanding of the reflectance properties of the soil. Because the mixing of the rays of light is randomized by the Moon terrain, we know from compressive sensing that if we know the mixing matrix, we could in effect deconvolute the light signal in order to produce spatial information not unlike in the case of the Random Lens Imager.In other words, the Moon terrain acts as a random mirror of sorts.. Now the big question is: what type of image resolution of Earth could we get out of our current knowledge of the Moon terrain ? While some folks are not interested in miles (km) size resolution, other folks are. The possibility of doing such an experiment would require specialists of the Moon optical and compressed sensing to be together in the same room (funded). Since it is difficult to estimate the resolution that could be obtain from such a scheme I doubt that any research of this kind is going to be funded and or even exist in the near future.

[Update: Yesterday, Researchers in Paris published on observing the Earth atmosphere bouncing off the Moon in a Moon eclipse, in the press release one can read:

....Using SOPHIE, a high-resolution spectrograph attached to the Observatoire de Haute Provence's 1.93-meter telescope in southern France, the researchers successfully detected ozone, oxygen, nitrogen, and sodium in the reflected light from Earth's atmosphere. "The surprise was that we succeeded with extremely sparse observations under relatively bad weather conditions," Vidal-Madjar says. "But seeing how easily oxygen was seen strongly argues in favor of high-spectral-resolution searches [of Earthlike extrasolar planets]." The team reports its findings in a paper accepted for publication in Astronomy & Astrophysics...

The paper is The Earth as an extrasolar transiting planet: Earth's atmospheric composition and thickness revealed by Lunar eclipse observations on arxiv.and one can see the poor spatial resolution (two points) used in the paper in the following figure

][1] Biomarkers in disk-averaged near-UV to near-IR Earth spectra using Earthshine observations, S. Hamdani, L. Arnold, C. Foellmi, J. Berthier, M. Billeres, D. Briot, P. François, P. Riaud, and J. Schneider

[2] Earthshine Observation of Vegetation and Implication for Life Detection on Other Planets: A Review of 2001–2006 Works by Luc Arnold. The very insightful presentation for this work can be found here and here.

[ Other Technologies that do not exist but could be enabled through compressive sensing can be found here]

[ Other Technologies that do not exist but could be enabled through compressive sensing can be found here]

Friday, July 23, 2010

CS: N900 Computational Photography, A Review of Fast l1-Minimization Algorithms for Robust Face Recognition, Latest news on Compressive and Compressed Sensing

As I am away, the latest news on Compressive and Compressed Sensing can be found in the links found at the end of this entry. In the meantime, two news:

Camera 2.0: New computing platforms for computational photography. The Nokia900 can be used for computational photography. From the site that made it happen:

woohoo.

Q&As

MathOverflow:

MetaOptimize:

LinkedIn:

TheoreticalCS:(not yet working)

BioStar

Friendfeed/Twitter

Camera 2.0: New computing platforms for computational photography. The Nokia900 can be used for computational photography. From the site that made it happen:

From the same project, one can read: The Frankencamera: An Experimental Platform for Computational Photography by Andrew Adams, Eino-Ville (Eddy) Talvala, Sung Hee Park, David E. Jacobs, Boris Ajdin, Natasha Gelfand, Jennifer Dolson, Daniel Vaquero, Jongmin Baek,Marius Tico, Henrik P.A. Lensch, Wojciech Matusik, Kari Pulli, Mark Horowitz, Marc LevoyWe've released the FCam API for the Nokia N900 smartphone, along with some example applications. This is the same API we use to control the Frankencamera F2 (shown above). Now you can make your N900's camera programmable (without bricking the phone)! Go here to get started....On July 21, 2010 we released the source code for our N900 implementation. A binary executable was also released, which can be downloaded to any retail N900 (without bricking the phone), thereby making its camera programmable. We also plan to make the more powerful Frankencamera available to the international research and teaching community at minimal cost, but we don't yet have timetable for this release. When we release it, distribution to universities in the U.S. will be free, financed by a grant from the National Science Foundation. The Camera 2.0 project, which began as a collaboration between the Stanford Computer Graphics Laboratory and the Nokia Research Center Palo Alto Laboratory, also receives funding from Adobe Systems, Kodak, Hewlett-Packard, and the Walt Disney Company. The NSF portion of the project is in collaboration with Fredo Durand and William Freeman of MIT.

woohoo.

A Review of Fast l1-Minimization Algorithms for Robust Face Recognition by Allen Y. Yang, Arvind Ganesh, Zihan Zhou, S. Shankar Sastry, Yi Ma. The abstract reads:

l1-minimization refers to finding the minimum l1-norm solution to an underdetermined linear system b=Ax. It has recently received much attention, mainly motivated by the new compressive sensing theory that shows that under quite general conditions the minimum l1-norm solution is also the sparsest solution to the system of linear equations. Although the underlying problem is a linear program, conventional algorithms such as interior-point methods suffer from poor scalability for large-scale real world problems. A number of accelerated algorithms have been recently proposed that take advantage of the special structure of the l1-minimization problem. In this paper, we provide a comprehensive review of five representative approaches, namely, Gradient Projection, Homotopy, Iterative Shrinkage-Thresholding, Proximal Gradient, and Augmented Lagrange Multiplier. The work is intended to fill in a gap in the existing literature to systematically benchmark the performance of these algorithms using a consistent experimental setting. In particular, the paper will focus on a recently proposed face recognition algorithm, where a sparse representation framework has been used to recover human identities from facial images that may be affected by illumination, occlusion, and facial disguise. MATLAB implementations of the algorithms described in this paper have been made publicly available.

The sites where the benchmarks are located is here. From the page:

Now on to the sites to check for any news on compressive sensing, here is the (incomplete list).

The package contains a consolidated implementation of seven l-1 minimization algorithms in MATLAB. Each function uses a consistent set of parameters (e.g., stopping criterion and tolerance) to interface with our benchmark scripts.

- Orthogonal Matching Pursuit: SolveOMP.m

- Primal-Dual Interior-Point Method: SolveBP.m

- Gradient Projection: SolveL1LS.m

- Homotopy: SolveHomotopy.m

- Polytope Faces Pursuit: SolvePFP.m

- Iterative Thresholding: SolveSpaRSA.m

- Proximal Gradient: SolveFISTA.m

- Primal Augmented Lagrange Multiplier: SolvePALM.m

- Dual Augmented Lagrange Multiplier: SolveDALM.m; SolveDALM_fast.m

Now on to the sites to check for any news on compressive sensing, here is the (incomplete list).

- Arxiv

- Google (Compressive Sensing / Compressed Sensing) 24 hours, week, month.

- Rice University Compressive Sensing repository

Q&As

MathOverflow:

MetaOptimize:

LinkedIn:

TheoreticalCS:(not yet working)

BioStar

Friendfeed/Twitter

- Compressed Sensing and Compressive Sensing in FriendFeed

- Compressed Sensing, Compressive Sensing on Twitter.

A Magic Trick

What aspect of Compressive Sensing or Group Testing is most magical or surprising ?

In the cards shown below, the magician asks a person in the audience to choose a number between 1 and 63 and ask her to keep it in her head. The magician then proceeds and asks the spectator which card holds that number. As soon as the six cards have been queried, the magician knows exactly what the initial number is. How does she do it ?

Answer on Monday. Have a good week-end!

If you think this blog provides a service, please support it by ordering through the Amazon - Nuit Blanche Reference Store

In the cards shown below, the magician asks a person in the audience to choose a number between 1 and 63 and ask her to keep it in her head. The magician then proceeds and asks the spectator which card holds that number. As soon as the six cards have been queried, the magician knows exactly what the initial number is. How does she do it ?

Answer on Monday. Have a good week-end!

If you think this blog provides a service, please support it by ordering through the Amazon - Nuit Blanche Reference Store

Thursday, July 22, 2010

CS: Bacterial Community Reconstruction Using A Single Sequencing Reaction and LinkedIn Group management.

I'll be away from the interwebs for the next few weeks. Alejandro Weinstein, Ravi Kiran B., Sri Hari B. H., Thomas Arildsen and I are now co-managers of the LinkedIn Compressive Sensing group which means there should not be any disruption in terms of accepting new members to the group as managers now cover the main regions of the world (Asia, America, Europe). The group now counts more than 523 members, who will be the 1000th ?

I featured their work on Monday with Comseq, they are now coming up with a new preprint and give a new meaning to BCS: it's not Bryan-College-Station, nor Bayesian Compressive Sensing, it's Bacterial Compressed Sensing, a whole new program....Bacterial Community Reconstruction Using A Single Sequencing Reaction by Amnon Amir, Or Zuk. The abstract reads:

The attendant matlab BCS code is here.

Bacteria are the unseen majority on our planet, with millions of species and comprising most of the living protoplasm. While current methods enable in-depth study of a small number of communities, a simple tool for breadth studies of bacterial population composition in a large number of samples is lacking. We propose a novel approach for reconstruction of the composition of an unknown mixture of bacteria using a single Sanger-sequencing reaction of the mixture. This method is based on compressive sensing theory, which deals with reconstruction of a sparse signal using a small number of measurements. Utilizing the fact that in many cases each bacterial community is comprised of a small subset of the known bacterial species, we show the feasibility of this approach for determining the composition of a bacterial mixture. Using simulations, we show that sequencing a few hundred base-pairs of the 16S rRNA gene sequence may provide enough information for reconstruction of mixtures containing tens of species, out of tens of thousands, even in the presence of realistic measurement noise. Finally, we show initial promising results when applying our method for the reconstruction of a toy experimental mixture with five species. Our approach may have a potential for a practical and efficient way for identifying bacterial species compositions in biological samples.

Wednesday, July 21, 2010

Around the blogs in 80 hours, SIAM prizes.

Here are the entries for this week:

- James Lee: The Majorizing Measures Theorem

- Bob Sturm:

- Interesting Post-doc Opportunity,

- Paper of the Day (Po'D): Bayesian Pursuit Algorithm for Sparse Representation Edition

- Terry Tao:The Golden-Thompson inequality

- Alex Gittens: Online note taking?

- Gonzalo Vazquez Vilar: Cognitive Radio in arXiv.org

- Andrew MvGregor: Bite-sized streams: Random Walks

- Frank Nielsen: ISVD 2010: Voronoi diagrams

- Anand Sarwate: David Blackwell has passed away

- Andrew Gelman: David Blackwell

- Laurent Duval: Upcoming SIVA signal and image conferences - Concern fees and unrelated to the themes of Nuit Blanche Aleks Jakulin: Demographics: what variable best predicts a financial crisis?

George Pólya Prize

- Emmanuel Candès, Stanford University

- Terence Tao, University of California, Los Angeles

SIAM Student Paper Prize

Congratulations to the Emmanuel, Terry and Bubacarr !

- Bubacarr Bah, University of Edinburgh, United Kingdom

Tuesday, July 20, 2010

CS: Nonuniform Sparse Recovery with Gaussian Matrices and two questions

Looks like we are going to have a formula for one of the Donoho-Tanner Phase Transition in Nonuniform Sparse Recovery with Gaussian Matrices by Ulaş Ayaz, Holger Rauhut. The abstract reads:

Compressive sensing predicts that sufficiently sparse vectors can be recovered from highly incomplete information. Efficient recovery methods such as $\ell_1$-minimization find the sparsest solution to certain systems of equations. Random matrices have become a popular choice for the measurement matrix. Indeed, near-optimal uniform recovery results have been shown for such matrices. In this note we focus on nonuniform recovery using Gaussian random matrices and $\ell_1$-minimization. We provide a condition on the number of samples in terms of the sparsity and the signal length which guarantees that a fixed sparse signal can be recovered with a random draw of the matrix using $\ell_1$-minimization. The constant $2$ in the condition is optimal, and the proof is rather short compared to a similar result due to Donoho and Tanner.

On MathOverflow the following question was asked:

I'm wondering if compressed sensing can be applied to a problem I have in the way I describe, and also whether it should be applied to this problem (or whether it's simply the wrong tool).

I have a large network and plenty of data on each node. I want to find a set of communities that explain most data similarity and track these communities over time. In a compressed sensing formulation this amounts to the following:

-My graph's representation basis is a weighted set of communities, where each community is a subset of the set of all nodes (candidate communities can be narrowed down to a tractable number rather easily)

-Different feature measures (e.g. bigrams, topic profiles) serve as my sensing basis, with correlations between community membership and features serving as the coefficients of my measurement matrix. The big assumptions, that my feature measurements have the Restricted Isometry Property, that similarity is incoherent with community, and that similarity is a linear combination of community, are all almost certainly incorrect, however they seem plausible approximations to within (possibly significant) noise.

Ideally, I can use this strategy to describe my network as a collection of communities and to track over time the prominence of these communities. I wonder, however, if there isn't some straightforward bayesian method that I'm overlooking.

Misc Questions about Compressed Sensing:

i) If my measurements are not linear combinations of my representation basis, but at least convex, then can I still usefully use compressed sensing?

ii) What is the meaning of the dual of basis pursuit?

iii) How does one avoid basis mismatch in general? You choose your representation basis elements beforehand, so how do you make sure they're capable of representation?

Perhaps the answer to this question is obvious. Nonetheless, I would like to pose the following question:

Suppose we are presented with an image that has been corrupted by the addition of some small amount of white Gaussian noise. Further suppose that we also have available a uniformly random sub-sampling of the original uncorrupted image. The problem is to recover the original image, in its entirety.

Ignoring the trivial boundary cases, does a solution exist; if so, does it map directly to a known method?

At first glance it looks like a variation on the Matrix Completion problem, but the circumstances seem to suggest the existence of a more computationally efficient approach. On the other hand, it occurs to me that we are, effectively, asking to complete the additive noise matrix, which is by no means sparse.

Can anyone enlighten me in this regard?

Monday, July 19, 2010

CS: ComSeq, Simulating and analyzing compressed-sensing pooling design experiments for next-generation sequencing projects

In a previous entry , I mentioned the paper entitled Rare-Allele Detection Using Compressed Se(que)nsing by Noam Shental, Amnon Amir and Or Zuk.Well the project seems to have produced a set of utilities called ComSeq. From the site:

Overview

ComSeq is a set of utilities used for simulating and analyzing compressed-sensing pooling design experiments for next-generation sequencing projects. The aim is to identify novel rare alleles, or detect individuals who are carriers of known SNPs in a large population. The package enables an in-silico simulation of a sequencing pooling experiments, including modeling of sequencing error parameters. The package also enables reconstructing genotypes of individuals profiled in real experiments. Detailed instructions and usage examples are given in the README file.

About

ComSeq is a collaboration between the Broad Institute, the Open University of Israel , and the Weizmann Institute of Science. If you use the package please cite the following paper:

Identification of Rare-Alleles And Their Carriers Using Compressed Se(que)nsing, N. Shental, A. Amir and O. Zuk (2010)

ComSeq Installation

ComSeq includes a set of Matlab functions which can be individually used. You can download the whole package, or individual functions below. To install the package simply download the file comseq.tgz to a directory of your choice, extract it, and add to your Matlab path. The package uses the GPSR algorithm. We thank Mário Figueiredo for allowing us to include it in this package.

Sunday, July 18, 2010

CS: These Technologies Do Not Exist: Time Modulated Photo-Multiplier Tubes

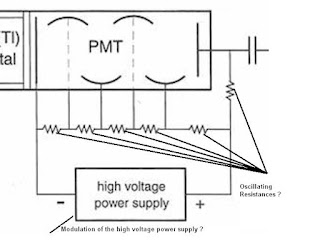

As an addendum to yesterday's entry on Compressive MultiChannel Analyzers, one couldd also think of a way to do some type of time multiplexing of the Photo-Multiplier Tubes (PMT). The multiplexing could be performed by oscillating resistances between the dynodes or by modulating the power supply of the PMT..

Why would you want to have a technology like this one ? At the very least it could provide some rapid detection of abnormal events from a steady state background. This modulation could also be undertaken in concert with a modulation of the Random Anger Coding Scheme or the Random X-ray Collector mentioned earlier.

This entry will be added to the "These Technologies Do Not Exist" page.

Saturday, July 17, 2010

CS: These Technologies Do Not Exist: Compressive MultiChannel Radiation Analyzers

Radiation detectors are the basis of many medical imaging techniques, yet I believe not much attention is being paid to them in terms of rethinking the way they are designed to include the new techniques developed on the compressive sensing framework. Let me give you an simple example. But first before thinking about a new technology, one really needs to see what has been done before. Probably not the extent of being an expert but at least to the point where one gets a sense of the gist of what is being performed. I am taking some of the figures from this presentation on Radiation Detection & Measurement II, Pulse height spectroscopy which takes most of its material from The Essential Physics of Medical Imaging by Jerrold Bushberg. As a nuclear engineer, one of the textbook I had and can recommend is Radiation Detection and Measurement by Glenn Knoll (the errata sheet is here ). If you recall I asked Glenn two years ago about coded aperture ( CS: Coded Mask Imagers: What are they good for ? The George Costanza "Do the Opposite" Sampling Scheme). These two books are a good starting point and provide a very good shelf book for practicing engineers and physicists alike. They definitely provide a good vocabulary if you end up talking to a radiation detector designer.

by Glenn Knoll (the errata sheet is here ). If you recall I asked Glenn two years ago about coded aperture ( CS: Coded Mask Imagers: What are they good for ? The George Costanza "Do the Opposite" Sampling Scheme). These two books are a good starting point and provide a very good shelf book for practicing engineers and physicists alike. They definitely provide a good vocabulary if you end up talking to a radiation detector designer.

One of the simplest configuration for detecting radiation is detecting Gamma ray photons. As shown in the figure below, a photon source provides undirected gammas which interact with the NaI(TI) material which will transform a high energy photon (gamma) into a photon in the visible light range that can be converted into an electron and then into a electrical current.

One also notices that the gamma rays also interact with the rest of the detector. Some of the interaction processes were already described in These Technologies Do Not Exist: A Random Anger Coding Scheme for PET/SPECT cameras entry. The figure below lists all kinds of interactions the gamma source eventually have with the detector (A, B, C, D, E, F):

Specifically as mentioned in the text of the presentation and the associated book:

Interactions of photons with a spectrometer

•An incident photon can deposit its full energy by:

–A photoelectric interaction (A)

–One or more Compton scatters followed by a photoelectric interaction (B)

•A photon will deposit only a fraction of its energy if it interacts by Compton scattering and the scattered photon escapes the detector (C)

–Energy deposited depends on scattering angle, with larger angle scatters depositing larger energies

Even if the incident photon interacts by the photoelectric effect, less than its total energy will be deposited if the inner-shell electron vacancy created by the interaction results in emission of a characteristic x-ray that escapes the detector (D)

Detectors normally shielded to reduce effects of natural background radiation and nearby radiation sources

•An x-ray or gamma-ray may interact in the shield of the detector and deposit energy in the detector:

–Compton scatter in the shield, with the scattered photon striking the detector (E)

–A characteristic x-ray from the shield may interact with the detector (F)

In the end, the spectrogram for this source is shown in its ideal form on the left hand side of the next figure and its real shape in the real configuration.

In other words, the two diracs have now been transformed into a much smoother function as a result of the interaction of the source with the detector. It so happens that the past 60-70 years, most detectors have been built in order to get the real figure (on the right) as close as possible to the left one. In effect, any new improvement of the technology (for instance replacing the NaI material with Silicon Drift Detectors (SDD) as in HICAM) is trying to make the right peak as close to a dirac as possible ( I am sure some of you are already seeing where I am going to say but nevertheless let us continue.) How bad is the interaction with the rest of the detector ? It can be made very bad, as shown in the figure below where the difference between the two spectra is directly dependent on the shape of the container surrounding the detector.

Another important characteristics of these sensors is that in order to provide spectral resolution, a gating system is put in place. The result of these spectra is generally given on Multi Channel Analyzers (MCA) that are natural extension of Single Channel Analysers (SCA) shown below.

Given all this background, what would look like a Compressive MultiChannel Analyzer ? and what would be its purpose compared to current established technology ?

A natural compressive sensing approach to this problem would probably revolve around a stronger smoothing of the real dirac figure making the detector measurement a direct incoherent measurement of the spectrum. How would we go about this ? After some thoughts, there are probably two paths to be investigated:

- change the arrangement of the material in front of the detector so that more Compton scattering occur (recall in current radiation detector technology we want to so the opposite of that)

- change the \Delta E so that it is a random set as opposed to a unique value.

By changing the structure of the material in front of the detector, this new type of detector may be providing directional sensitivity for a similar energy resolution. By changing the \Delta E, one might also provide a better control over the noise of the scattered signal. A way to investigate all this phase space would be to use some forward modeling using codes like MCNP or GEANT. After having created a concept, one can check the design using the Random coding for forward modeling as recently presented by Justin Romberg recently. This entry will be added to the These Technologies Do Not Exist page.

Friday, July 16, 2010

A snapshot of the most viewed presentations at MMDS 2010 in a 12 hour timeline.

I looked at the exit pages statistics of the Full set of presentations made at the 2010 Modern Massive Data Sets (MMDS 2010) over a period of 12 hours, here is what has interested you the most (please note that the LASTNAME file is a placeholder for either Mauro Maggioni's or Alon Orlitsky's presentations that are missing):

Subscribe to:

Posts (Atom)